When to quit on a product idea

Knowing when to quit is easiest at the extremes.

If conversion rates are high, your messaging is clicking with each new prospect, and people are quickly signing up to be customers, then it’s obvious you should keep going.

Conversely, if you have zero interest then it’s obviously time to move on.

But what do you do about the messy middle, that grey area that 90% of product ideas fall into? You’re getting some interest and your marketing efforts are sort of working, but it just feels like a slog.

That’s where I’ve been stuck with my product ChartJuice, basically since its inception. I was getting just enough positive signals that it felt like I should keep going, but meaningful traction was slow and hard to come by.

I recently made the decision to put ChartJuice firmly on the back burner and start focusing on a new product (more on that later). I thought it would be helpful to write about the factors that led to my decision.

My goal is to start to build a more concrete framework for answering the question, “when do you quit on an idea and when do you keep going?” Some people talk about product sense, the ability built over time to intuitively answer this question. But that concept isn’t particularly helpful when you’re still building it.

Per usual, these thoughts are specifically for founders building a bootstrapped business, although some of these tests should be universal.

Have you disproved all of your hypotheses?

I was talking to my friend Andy Seavers, a serial entrepreneur and CEO of CaseStatus, about the lukewarm response I was getting to ChartJuice and he gave me some great advice.

He said, “it’s all about, ‘What is that core hypothesis?’ that needs to be proven true, and being real with yourself on whether or not it gets proven false early or continues to NOT be proven false (you get little proven true proof till product market fit).”

There are two things I love about this:

- Validating an idea feels like this big, vague, confusing task. But if you break it down into the core hypotheses that need to be true for the business to work, it’s easier to define experiments and goals to test those.

- Focusing on whether a hypothesis continues to NOT be proven false, rather than requiring it to be proven true is a more realistic depiction of the uncertainty you’re always facing in early stage startups.

Jason Cohen calls this “the Iterative-Hypothesis customer development method.” In every user interview or customer call, “your mindset should be: ‘What does this person know, that invalidates something I thought was true?’”

Andy gave me an example from a failed product he worked on called Taste, a Yelp competitor. “With Taste, the alpha hypothesis was that the solution worked and it was fun. That continued to not be proven false (maybe true). Our second milestone was two hypotheses:

- The problem is real enough for people to want to solve with technology (pretty standard SaaS hypothesis).

- The solution could be shareable (virality coefficient aka K-Factor).

I believe we proved both to be false. It was too hard to explain to people why it was better, and people only think they want to know where the best places are, but they would rather have confirmation bias from a review telling them that they are going to have the experience they want to have.”

After my early validation work on ChartJuice I had a couple of hypotheses:

- The problem of adding charts to product analytics emails is real enough that Product teams will pay for a chart-to-image API.

- A subset of the users searching for general search terms like “chart builder” (which had the highest search volume) are looking for a chart-to-image API like ChartJuice.

Once I built the product and started running ads, I disproved the second hypothesis pretty quickly. I could clearly see that the users signing up weren’t using the product much, and weren’t in roles that would make sense for a chart-to-image API.

I revised my hypothesis and narrowed my keyword targeting, but still saw basically the same results.

Also, of the two most promising leads I had from my early validation, one had found a new solution that they felt was “good enough” by the time I had an MVP built. The other kept having higher priorities that stopped them from trying the tool every time I checked in.

I tried a round of cold emails to product managers as well, with no positive indicators (although my emails certainly could have been stronger). So it felt increasingly like my core hypothesis was being disproved as well.
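If it helps to make this concrete, here is a minimal sketch of how you might track hypotheses as “not yet disproved” versus “disproved.” The `Hypothesis` class, the `Status` values, and the `all_disproved` helper are names I made up for illustration, not part of any tool or of Andy’s or Jason’s methods. The example data paraphrases the two ChartJuice hypotheses above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    # There is deliberately no "proven true" state: early on, the best
    # a hypothesis can do is survive another experiment.
    NOT_YET_DISPROVED = "not yet disproved"
    DISPROVED = "disproved"


@dataclass
class Hypothesis:
    statement: str
    status: Status = Status.NOT_YET_DISPROVED
    evidence: list[str] = field(default_factory=list)

    def record(self, note: str, disproves: bool = False) -> None:
        """Log what an experiment showed; flip status only on disproof."""
        self.evidence.append(note)
        if disproves:
            self.status = Status.DISPROVED


def all_disproved(hypotheses: list[Hypothesis]) -> bool:
    """True only when there is at least one hypothesis and none survive."""
    return bool(hypotheses) and all(
        h.status is Status.DISPROVED for h in hypotheses
    )


# Hypothetical example data, paraphrased from the ChartJuice story.
api_demand = Hypothesis("Product teams will pay for a chart-to-image API")
search_intent = Hypothesis(
    'Some people searching "chart builder" want a chart-to-image API'
)

search_intent.record("Ad signups barely used the product", disproves=True)
api_demand.record("Cold emails to product managers: no positive indicators")

print(all_disproved([api_demand, search_intent]))  # False
```

Weak negative evidence, like the cold emails, gets logged without flipping the status. That keeps a record of signals piling up against a hypothesis without pretending it has been conclusively disproved.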

Make sure your idea passes basic market heuristics

I’ve written before about market size heuristics for indie founders and how market size matters even if you’re bootstrapped. While a small market isn’t a guarantee that your product idea won’t be successful, it is a potential red flag and will impact how you should think about sales, marketing, and pricing.

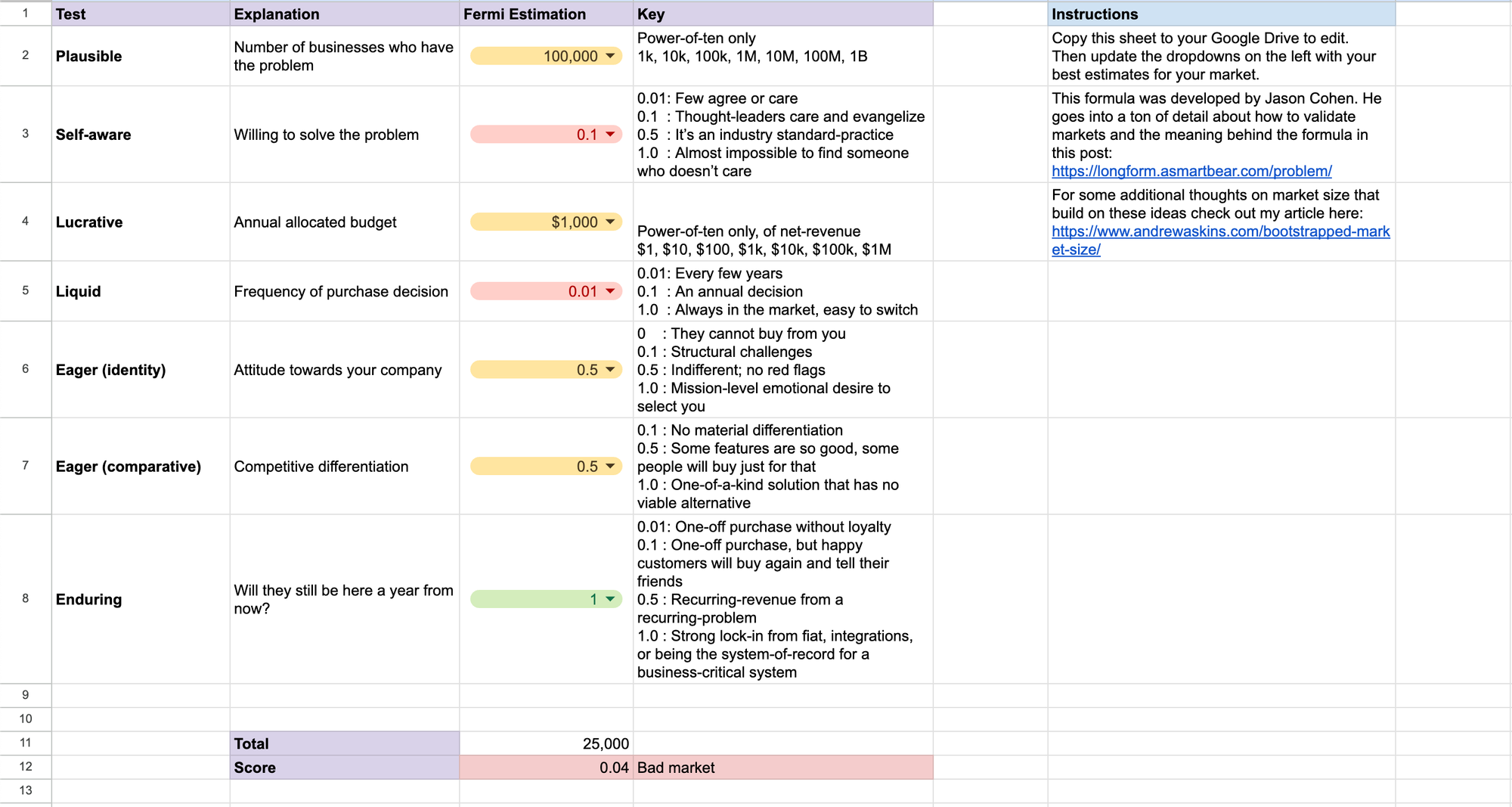

Thinking about your market goes beyond just size, too. Jason Cohen has six factors that make up a great market:

- Plausible - Big enough

- Self-aware - Know they have a problem

- Lucrative - Have budget

- Liquid - Willing and able to buy today

- Eager - Willing to buy from you

- Enduring - Will stick around

If your market fails enough of these factors (which you may need to determine or verify through iterative-hypothesis customer research) then it may be worth moving on.

The chart-to-image API use case for ChartJuice failed on several of these factors and didn’t look like a great market. The market was small (not plausible) if I was just selling to software companies and, most importantly, users would go years between needing to redesign their analytics emails (not liquid).
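One way to keep yourself honest here is to write the six factors down and mark each as passing, failing, or not yet researched. The snippet below is a rough sketch under my own assumptions: the `red_flags` and `unknowns` helpers and the dictionary layout are invented for illustration, and only the two factors named above are filled in for ChartJuice.

```python
from typing import Optional

# Jason Cohen's six factors, in the order listed above.
FACTORS = ["plausible", "self-aware", "lucrative", "liquid", "eager", "enduring"]


def red_flags(assessment: dict[str, Optional[bool]]) -> list[str]:
    """Factors the market clearly fails (explicitly marked False)."""
    return [f for f in FACTORS if assessment.get(f) is False]


def unknowns(assessment: dict[str, Optional[bool]]) -> list[str]:
    """Factors that are missing or None, i.e. still need research."""
    return [f for f in FACTORS if assessment.get(f) is None]


# Hypothetical assessment of the chart-to-image API use case.
# Only the two factors discussed in the text are filled in.
chart_api = {"plausible": False, "liquid": False}

print(red_flags(chart_api))  # ['plausible', 'liquid']
print(unknowns(chart_api))   # ['self-aware', 'lucrative', 'eager', 'enduring']
```

Separating “fails” from “unknown” matters because an unknown is a prompt for more customer research, while a failure is a red flag you have to decide what to do with.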

The numbers looked a little better for a freemium, consumer/prosumer focused use case where ChartJuice is a Canva-style chart builder that works for anyone. But that use case comes with its own challenges: much lower price points, high churn, and much higher competition.

Is user feedback converging or diverging?

In his course the SaaS Launchpad, Rob Walling talks about finding patterns in user research. If you are struggling to identify common problems and patterns between users (or if the pattern is they aren’t interested) then you might need to move on.

Jason Cohen expands on this idea in his blog post, “The ‘Convergent’ theory of finding truth in darkness.” The basic idea is that with good ideas, user research tends to converge. You find people are saying the same things about how painful the problem is, how they’ve tried in the past to fix it, the value of fixing it, etc. But with bad ideas, everyone is telling you something different.

He talks about validating a startup idea before WPEngine. Initially, everyone told him the idea was great. But he got conflicting information once he dug deeper.

“One [person] said I should target enterprises, charging $1000/mo and selling through consultants. One said I should make it freemium and figure out how to make money converting 5% to $5/mo. Another said charge a minimum of $50/mo to cut out the moochers who email support but don’t pay for stuff.”

Looking back, the feedback I got from the users I talked to diverged more than it converged. One friend told me I should sell directly to ESPs, another told me I should charge a $3k setup fee and offer a white glove setup service. Everyone I talked to used a different ESP and kept their data in a different place. Some people were two years in and still didn’t have transactional emails set up, others had already built them 6 months in.

Contrast that with the new product I’ve been researching. Every user mentions the same 3-5 tools, they all follow a similar process for solving the problem today, and they all say they’d pay similar rates depending on their usage.

I will say, don’t give up too fast if you don’t see this convergence. It may just mean you haven’t found your ideal customer profile (ICP) yet. It took us several interviews to start talking to the right people, and that’s when we started seeing this convergence. My advice is to do at least 10-15 interviews to start. If you still aren’t seeing convergence, either change your ICP or mark it as a red flag.

Are you out of experiment ideas? Or motivation?

At the end of the day we’re all human. If you’re out of ideas for how to make your product work or just out of motivation to keep trying, it’s okay to quit. The point of starting a business is to do things on your own terms. Yes, it’s going to be hard. But you shouldn’t make yourself miserable.

In his course, Rob asks students whether they could see themselves working on this business for 5 years. Picking something you’re genuinely interested in and excited by will help you when motivation is low.

I’m not necessarily out of ideas for ChartJuice. I still think that a freemium model could work (even though freemium is usually a bad idea for bootstrappers) and there are a number of integrations I’d like to build. And I could be happy working on ChartJuice for 5 years if the traction was there.

But my motivation was running low after working on it in some capacity for 8 months. So I put the product on the back burner for now, with plans to try a couple of the experiments I still haven’t tried when motivation comes back.

If you’re unsure whether you want to keep working on something, I recommend taking some time away before you quit entirely. That’s why I say I’m putting ChartJuice on the back burner rather than shutting it down. Sometimes we just need a break. Of course, this is easier to do if the costs and maintenance needs of your product are low.

Know your personal patterns

As you’re making the decision of whether or not to quit, take a good hard look at your track record and your natural tendencies.

Part of the reason I kept pushing on ChartJuice for 8 months even when signals were lukewarm was that I know I’ve given up on ideas too early in the past. I’ve worked on products, run out of steam, and then seen similar products gain traction shortly after.

This time I wanted to push a little farther. I was also excited to learn to code again, and wanted to test my filter for the signals I was receiving.

Do you have a better option?

Ultimately, the biggest reason I decided to “quit” on ChartJuice (at least for now) is that I felt I had a better option.

I’ve started working on validating a new product idea, one I’ve been interested in for a long time, with a friend of mine. Early signals are much more promising. The market is plausible, self-aware, relatively lucrative, and liquid. I’ve been revising my hypotheses, and so far they have yet to be disproven. Feedback is converging. And I’m feeling really excited, and could see myself working on this for at least the next few years.

Now, it’s easy to take this last test to the extreme. Shiny object syndrome is something all entrepreneurs deal with.

When evaluating your options, you need to be brutally honest with yourself. Is the new thing really a better option? Or does it just seem better because it’s new and doesn’t have the baggage of your old idea?

When deciding whether to quit, ask these questions

So those are the questions I considered when deciding to put my project on the back burner. There’s still a lot of ambiguity within these tests. Being a founder is all about making hard decisions with limited data.

But if you’re at the point where you’re thinking it might be time to move on, ask yourself these questions. The answers won’t tell you whether to quit, but they may reveal red flags. It’s then up to you to decide what to do with those.

- How can you break your idea into smaller hypotheses?

- Have you disproved all of your hypotheses?

- Does the market have the indicators of a bad market?

- Is user feedback converging or diverging?

- Are you out of experiment ideas?

- Are you out of motivation?

- Are you someone who quits too fast or too slow?

- Do you have a better option (and not just a shinier option)?

What I’m working on next

The new product I’m working on is called MetaMonster. MetaMonster is an SEO tool that helps agencies and freelancers audit and clean up client metadata in minutes instead of months. It combines traditional web crawlers with generative AI and native CMS integrations to automate tedious SEO work.

If you’re interested in giving it a try, check out our website and join our waiting list. Users on the list will get regular updates and first access to the tool. And to jump to the front of the line, just reply to the welcome email and let me know you want to do a user interview.

I’ll also be writing about how I’ve validated MetaMonster. I used lessons learned from validating ChartJuice but pushed myself to really focus on user research this time and the results have been super encouraging.